Alex L. Zhang | Recursive Language Models

Key Takeaways

- Recursive Language Models (RLMs) are introduced as an inference strategy for handling essentially unbounded input and output lengths.

- RLMs mitigate 'context rot' by allowing LLMs to recursively interact with their context stored in an environment (e.g., a Python REPL).

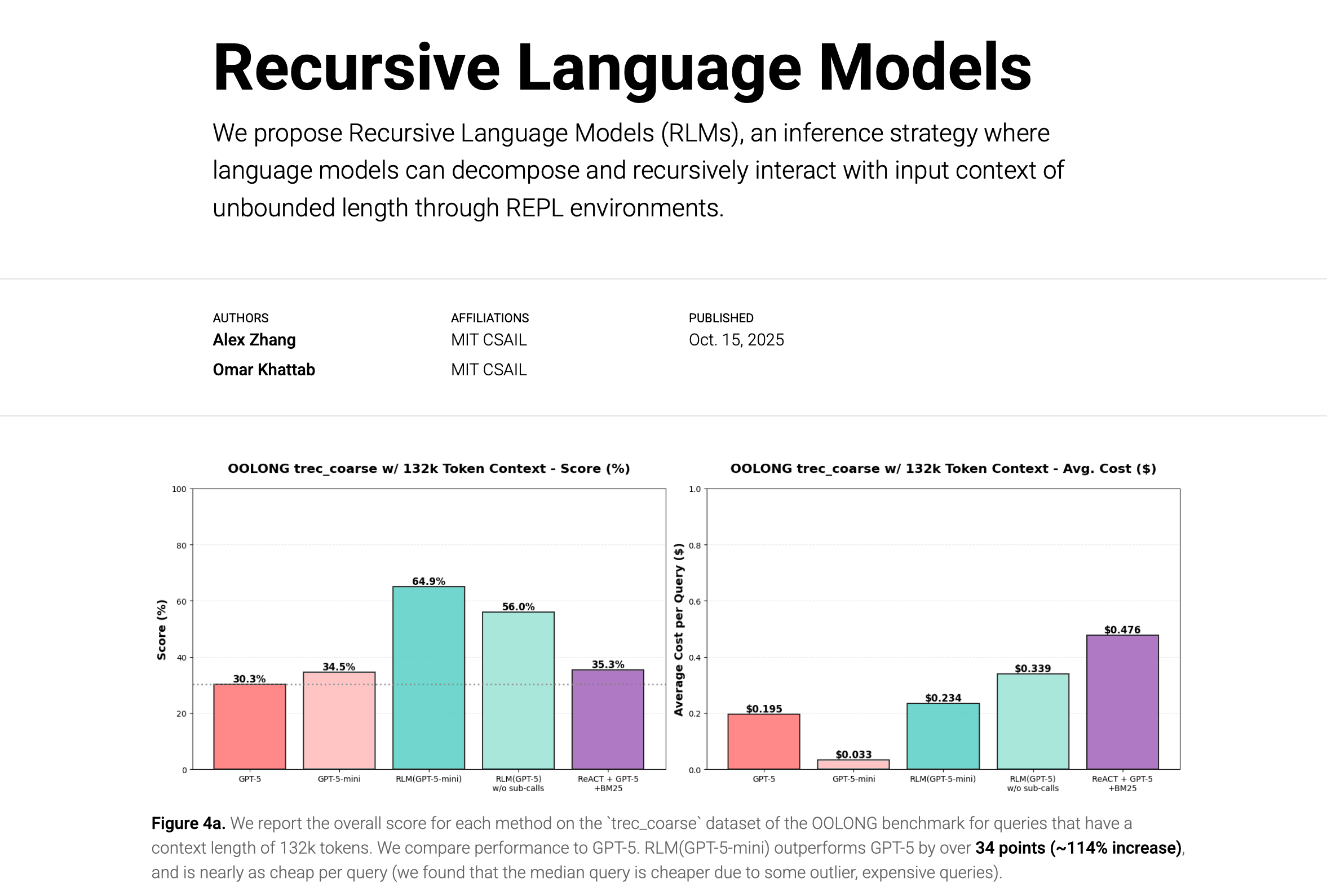

- An RLM built on GPT-5-mini answered more than twice as many questions correctly as standard GPT-5 on the OOLONG long-context benchmark while being cheaper per query.

- RLMs outperformed other methods, such as ReAct with test-time indexing, on a new Deep Research task derived from BrowseComp-Plus, and showed no performance degradation with 10M+ tokens at inference time.

- The authors predict RLMs will be the next significant milestone in general-purpose inference scaling after Chain-of-Thought and ReAct models.

The research introduces Recursive Language Models (RLMs), an inference strategy that lets language models handle essentially unbounded input and output lengths while mitigating 'context rot,' the phenomenon where recall degrades as context grows. An RLM is a thin wrapper around an LLM: the user's prompt context is stored as a variable in an environment such as a Python REPL, and the model can spawn recursive calls over that context for intermediate computation.

An implementation using GPT-5-mini produced more than twice as many correct answers as GPT-5 on the difficult OOLONG long-context benchmark while costing less per query. RLMs also outperformed other techniques, such as ReAct plus test-time indexing, on a newly constructed Deep Research task derived from BrowseComp-Plus, and showed no performance degradation when given 10 million or more tokens at inference time. The authors suggest that RLMs, especially those explicitly trained for recursive reasoning, will be the next major milestone in general-purpose inference scaling, following CoT and ReAct models.
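The wrapper idea described above (context held as a variable in a REPL-like environment, with the root model issuing recursive calls over pieces of it) can be illustrated with a toy sketch. Everything here is an assumption made for illustration and not the authors' implementation: `stub_root_llm` and `stub_sub_llm` are hypothetical stand-ins for real model calls, the names `llm_query` and `FINAL` are invented conventions, and the root "model" always emits the same fixed program, whereas a real RLM would let the model decide what code to write.

```python
from typing import Callable

# Fixed program that the stubbed root model "writes". It only touches names
# the environment exposes: `context`, `query`, and `llm_query`.
ROOT_PROGRAM = r'''
lines = context.splitlines()
chunks = ["\n".join(lines[i:i + 2]) for i in range(0, len(lines), 2)]
partials = [int(llm_query(query, chunk)) for chunk in chunks]
FINAL = str(sum(partials))
'''


def stub_sub_llm(query: str, chunk: str) -> str:
    """Hypothetical sub-call: counts lines in `chunk` containing the keyword
    that follows 'count:' in the query. A real system would call a model."""
    keyword = query.split("count:", 1)[1].strip()
    return str(sum(1 for line in chunk.splitlines() if keyword in line))


def stub_root_llm(query: str, context_len: int) -> str:
    """Hypothetical root model: sees only the query and the context length,
    never the context itself, and replies with Python source to execute."""
    return ROOT_PROGRAM


def rlm(
    query: str,
    context: str,
    root_llm: Callable[[str, int], str] = stub_root_llm,
    sub_llm: Callable[[str, str], str] = stub_sub_llm,
    max_steps: int = 3,
) -> str:
    # The environment plays the role of the REPL: the full context lives
    # here as a variable instead of in the root model's prompt.
    env = {"context": context, "query": query, "llm_query": sub_llm}
    for _ in range(max_steps):
        code = root_llm(query, len(context))
        exec(code, env)  # run the model-written code inside the environment
        if "FINAL" in env:  # invented convention: FINAL holds the answer
            return env["FINAL"]
    raise RuntimeError("root model did not produce a final answer")


log = "error: disk\nok\nerror: net\nok\nerror: cpu"
print(rlm("count: error", log))  # prints 3
```

In this sketch the sub-calls are plain stubbed calls, so the recursion is one level deep; passing `rlm` itself (suitably wrapped) as `sub_llm` would give deeper recursion. Executing model-written code with `exec` is only acceptable in a toy like this; a real deployment would need a sandboxed environment.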