Which Table Format Do LLMs Understand Best? (Results for 11 Formats)

Key Takeaways

- The format used to pass tabular data to an LLM significantly affects the accuracy of the AI system, which matters for data pipeline design and cost management.

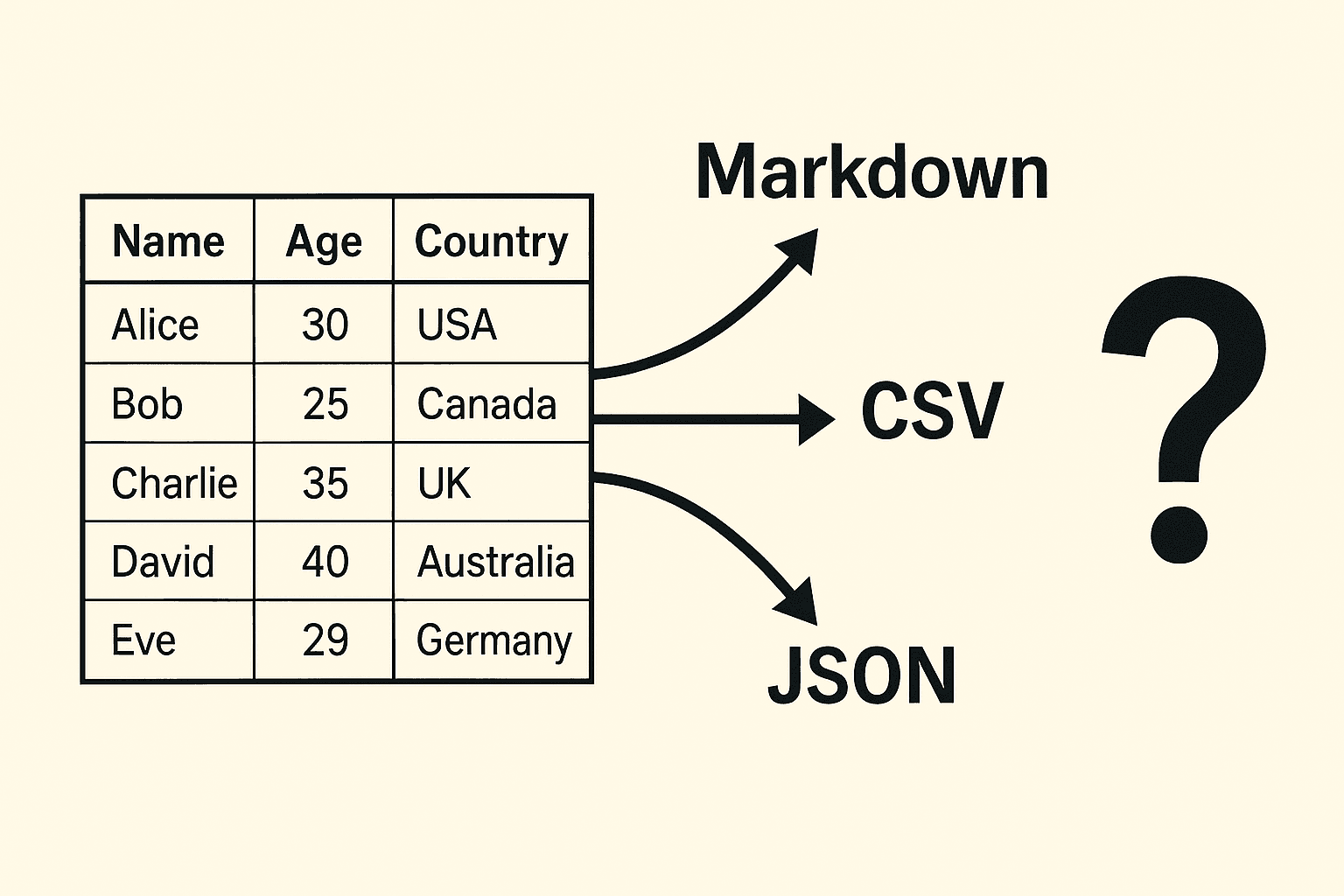

- An experiment tested 11 data formats (including Markdown-KV, JSON, CSV, XML) using 1000 records and 1000 queries against the GPT-4.1-nano model.

- The Markdown-KV format achieved the highest accuracy (60.7%), while CSV and JSONL performed worst (44.3% and 45.0%, respectively).

- There is a direct trade-off between accuracy and token usage, as the top-performing Markdown-KV format used 2.7 times more tokens than the most efficient format, CSV.

- The methodology suggests that, in real-world, large-scale applications, repeating the header row in formats such as CSV or HTML tables (for example, every 100 records) may aid LLM understanding.
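
The header-repetition idea in the last takeaway can be sketched as follows. This is a minimal illustration, not the experiment's own code: the function name `csv_with_repeated_headers`, the field names, and the sample records are all hypothetical, and the interval of 100 is simply the figure mentioned above.

```python
import csv
import io


def csv_with_repeated_headers(records, fieldnames, every=100):
    """Serialize records as CSV text, re-emitting the header row
    before each chunk of `every` data rows."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    for index, record in enumerate(records):
        # Write the header at row 0, then again every `every` rows.
        if index % every == 0:
            writer.writeheader()
        writer.writerow(record)
    return buffer.getvalue()


# Hypothetical synthetic records, for illustration only.
records = [
    {"id": i, "name": f"Employee {i}", "department": "Sales"}
    for i in range(1, 251)
]
text = csv_with_repeated_headers(records, ["id", "name", "department"], every=100)
```

With 250 records and an interval of 100, the header row appears three times: before rows 1, 101, and 201. Whether this actually improves accuracy for a given model would need to be measured; the source only raises it as a suggestion.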

The format used to present tabular data to Large Language Models (LLMs) is a critical, yet often overlooked, factor in the reliability of AI systems used for data analysis and decision-making. Understanding format sensitivity is important for structuring data workflows, optimizing performance, and managing the costs associated with token usage. To test this, a controlled experiment passed 1000 records of synthetic employee data to the GPT-4.1-nano model in 11 distinct data representation formats and asked it 1000 randomized questions. The findings revealed substantial variation in LLM comprehension: the custom Markdown-KV format achieved the highest accuracy at 60.7%, about 16 percentage points ahead of the lowest performer, CSV (44.3%). However, Markdown-KV also consumed 2.7 times more tokens than CSV, the most token-efficient format, which highlights a trade-off between accuracy and cost. The study suggests that organizations currently relying on formats like CSV or JSONL for structured data ingestion might see quick accuracy improvements by switching to better-suited representations.
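
To make the accuracy-versus-size trade-off concrete, the sketch below renders the same records as CSV and as a key-value Markdown layout. The exact Markdown-KV layout is not specified in this section, so the version here (a heading per record followed by `key: value` lines) is an assumption, as are the function names and the sample data. Character count is used only as a crude stand-in for token count; the ratio on toy data will not match the 2.7x figure reported above.

```python
import csv
import io


def to_markdown_kv(records):
    """Render each record as a Markdown heading followed by `key: value` lines.
    Assumed layout; the experiment's exact Markdown-KV format may differ."""
    blocks = []
    for number, record in enumerate(records, start=1):
        lines = [f"## Record {number}", ""]
        lines += [f"{key}: {value}" for key, value in record.items()]
        blocks.append("\n".join(lines))
    return "\n\n".join(blocks) + "\n"


def to_csv(records):
    """Render records as CSV with a single header row."""
    buffer = io.StringIO()
    fieldnames = list(records[0].keys())
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


# Hypothetical employee records, for illustration only.
records = [
    {"id": 1, "name": "Alice Example", "department": "Engineering", "salary": 85000},
    {"id": 2, "name": "Bob Example", "department": "Sales", "salary": 62000},
]

kv_text = to_markdown_kv(records)
csv_text = to_csv(records)

print(kv_text)
print(csv_text)
# Character length is only a rough proxy for token usage.
print(f"Markdown-KV / CSV length ratio: {len(kv_text) / len(csv_text):.1f}")
```

The key-value layout repeats every field name in every record, which is where the extra size comes from; CSV states the field names once. For a real cost estimate, the character count would need to be replaced with the tokenizer of the target model.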