Generalized Orders of Magnitude for Scalable, Parallel, High-Dynamic-Range Computation

Key Takeaways

- The paper introduces Generalized Orders of Magnitude (GOOMs) to avoid numerical underflow and overflow when compounding real numbers over long sequences.

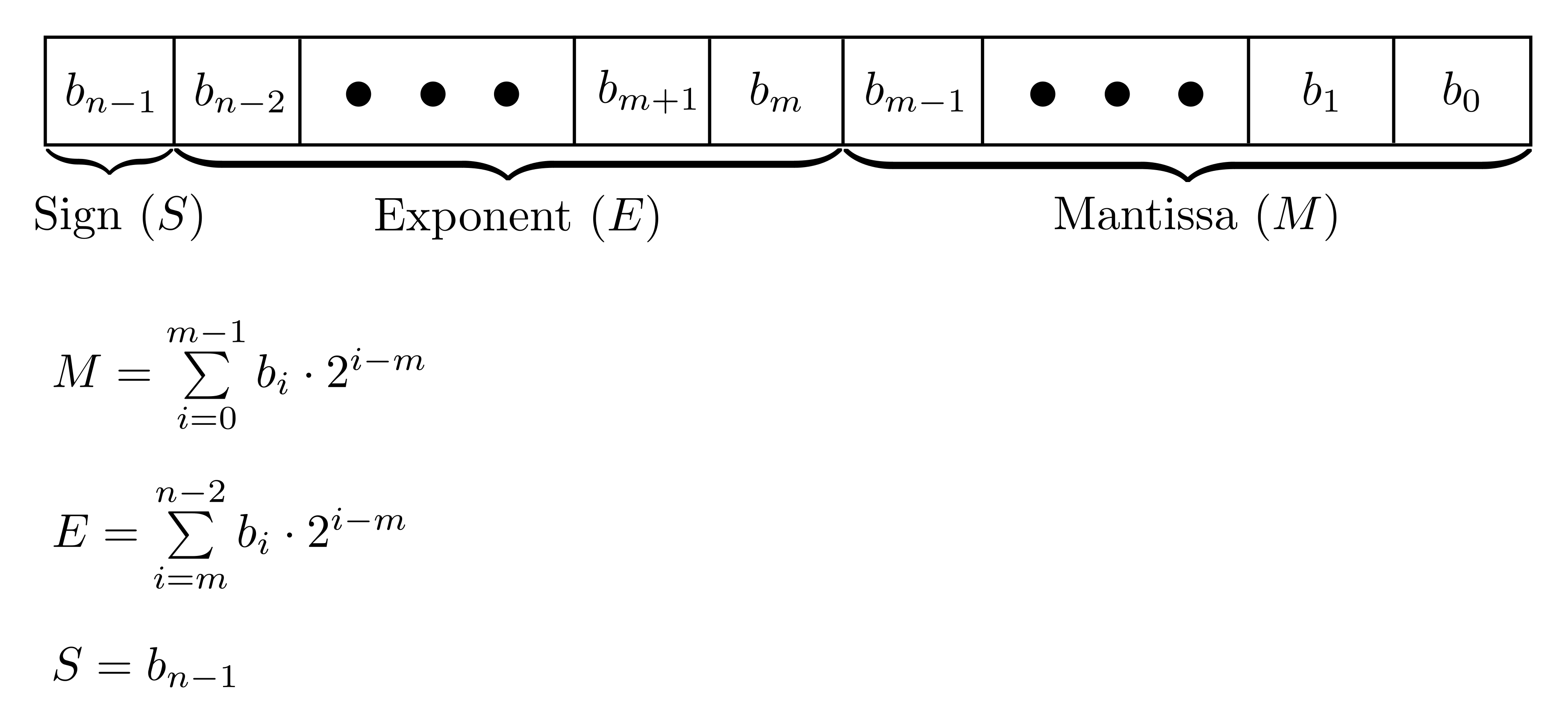

- GOOMs are a principled extension of traditional orders of magnitude, incorporating floating-point numbers as a special case.

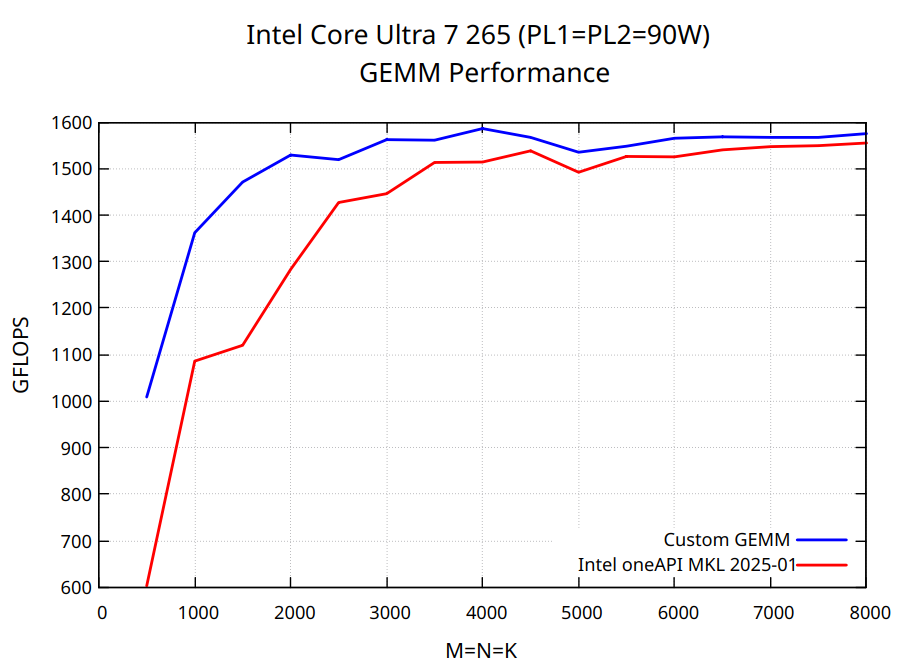

- The implementation utilizes an efficient custom parallel prefix scan for native execution on parallel hardware like GPUs.

- GOOMs enable stable computation over significantly larger dynamic ranges than previously possible.

- In three experiments, GOOMs outperform traditional approaches: compounding matrix products at large scale, fast parallel estimation of Lyapunov exponents, and stable capture of long-range dependencies in deep RNNs.
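To make the underflow problem in the takeaways concrete, here is a minimal Python sketch. It assumes each nonzero real is stored as a (log-magnitude, sign) pair, which is the ordinary log-domain trick and only an illustration of the idea, not the GOOM implementation itself. The names `to_log_sign` and `compound` are hypothetical.

```python
import math

def to_log_sign(x):
    """Represent a real as (log|x|, sign); zero maps to (-inf, 1) by convention."""
    if x == 0.0:
        return (-math.inf, 1)
    return (math.log(abs(x)), 1 if x > 0 else -1)

def compound(values):
    """Compound a product by summing log-magnitudes and multiplying signs."""
    log_mag, sign = 0.0, 1
    for x in values:
        lm, s = to_log_sign(x)
        log_mag += lm
        sign *= s
    return log_mag, sign

# 101 small negative factors: the true product is -1e-505,
# far below the smallest positive float64 (about 4.9e-324).
values = [-1e-5] * 101

# Naive floating-point compounding underflows to zero.
naive = 1.0
for x in values:
    naive *= x

# Log-domain compounding keeps both the magnitude and the sign.
log_mag, sign = compound(values)
log10_mag = log_mag / math.log(10)  # base-10 order of magnitude of the product
```

In this sketch `naive` has lost all information about the product, while `log10_mag` and `sign` still describe it. The cost is that addition of two such values needs extra care, which this example does not cover.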

Many critical domains, including deep learning and finance, require compounding real numbers over long sequences, which often causes catastrophic numerical underflow or overflow. To address this, the authors present Generalized Orders of Magnitude (GOOMs), a principled extension of traditional orders of magnitude that includes floating-point numbers as a special case. In practice, GOOMs allow stable computation over significantly larger dynamic ranges of real numbers than was previously possible. The authors implement GOOMs together with an efficient custom parallel prefix scan so that they execute natively on parallel hardware such as GPUs. In three representative experiments, the GOOMs implementation outperforms traditional approaches and makes previously impractical or impossible tasks feasible: compounding matrix products beyond standard limits, estimating Lyapunov exponents quickly and in parallel, and capturing long-range dependencies in deep recurrent neural networks with stable, parallel computation and no stabilization techniques. These results suggest that GOOMs are a scalable and numerically robust alternative for high-dynamic-range applications.
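The role of a prefix scan in compounding can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' custom scan: values are assumed to be nonzero reals stored as (log-magnitude, sign) pairs, the scan is a textbook Hillis-Steele inclusive scan, and it runs sequentially in plain Python. The names `combine` and `inclusive_scan` are hypothetical.

```python
import math

def combine(a, b):
    """Associative product of two (log|x|, sign) pairs."""
    return (a[0] + b[0], a[1] * b[1])

def inclusive_scan(items, op):
    """Hillis-Steele inclusive scan in about log2(n) rounds.

    Within a round, every element update reads only the previous round's
    values, so on parallel hardware the updates could run simultaneously.
    Here they are computed in an ordinary list comprehension.
    """
    out = list(items)
    step = 1
    while step < len(out):
        prev = out
        out = [
            prev[i] if i < step else op(prev[i - step], prev[i])
            for i in range(len(prev))
        ]
        step *= 2
    return out

# Nonzero factors whose running product leaves the float64 range:
# the last prefix has magnitude 1e-400.
factors = [0.5, -2.0, 4.0, -0.25, 1e-200, 1e-200]
pairs = [(math.log(abs(x)), 1 if x > 0 else -1) for x in factors]

# prefix[k] describes the product factors[0] * ... * factors[k].
prefix = inclusive_scan(pairs, combine)
signs = [s for _, s in prefix]
final_log10 = prefix[-1][0] / math.log(10)
```

Because `combine` is associative (up to floating-point rounding), the scan can regroup the products freely, which is what allows all running products to be obtained in a logarithmic number of rounds rather than one long sequential loop.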